Stochastic Processes - part 6

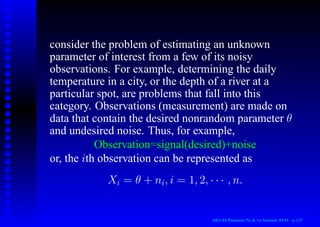

- 1. Consider the problem of estimating an unknown parameter of interest from a few of its noisy observations. For example, determining the daily temperature in a city, or the depth of a river at a particular spot, are problems that fall into this category. Observations (measurements) are made on data that contain the desired nonrandom parameter θ and undesired noise. Thus, for example, observation = signal (desired) + noise; that is, the $i$th observation can be represented as $X_i = \theta + n_i$, $i = 1, 2, \dots, n$. AKU-EE/Parameter/HA, 1st Semester, 84-85 – p.1/27

- 2. Here θ represents the unknown nonrandom desired parameter, and $n_i$, $i = 1, 2, \dots, n$, represent random variables that may be dependent or independent from observation to observation. Given $n$ observations $\{x_1, x_2, \dots, x_n\}$, the estimation problem is to obtain the “best” estimator for the unknown parameter θ in terms of these observations. Let us denote by $\hat\theta(x)$ the estimator for θ. Obviously, $\hat\theta(x)$ is a function of the observations only. “Best estimator” in what sense? Various optimization strategies can be used to define the term “best”.

- 3. Ideally, the estimate $\hat\theta(x)$ would coincide with the unknown θ. This, of course, may not be possible, and almost always any estimate will result in an error $e = \hat\theta(x) - \theta$. One strategy is to select the estimator $\hat\theta(x)$ so as to minimize some function of this error, for example the mean square error (MMSE) or the absolute value of the error. A more fundamental approach is the principle of Maximum Likelihood (ML).

- 4. The underlying assumption in any estimation problem is that the available data has something to do with the unknown parameter θ. More precisely, we assume that the joint PDF of the observations, $f_X(x_1, x_2, \dots, x_n; \theta)$, depends on θ. The method of maximum likelihood assumes that the given sample data set is representative of the population $f_X(x_1, x_2, \dots, x_n; \theta)$ and chooses the value of θ that most likely caused the observed data to occur, i.e., once the observations $\{x_1, x_2, \dots, x_n\}$ are given,

- 5. $f_X(x_1, x_2, \dots, x_n; \theta)$ is a function of θ alone, and the value of θ that maximizes this PDF is the most likely value for θ; it is chosen as the ML estimate $\hat\theta_{ML}(x)$ for θ (Figure 1).
  Figure 1: ML principle (the likelihood $f_X(x_1, \dots, x_n; \theta)$ plotted against θ, peaking at $\hat\theta_{ML}(x)$).

- 6. Given $\{x_1, x_2, \dots, x_n\}$, the joint PDF $f_X(x_1, \dots, x_n; \theta)$ represents the likelihood function, and the ML estimate can be determined either from the likelihood equation $f_X(x_1, \dots, x_n; \hat\theta_{ML}) = \sup_{\theta} f_X(x_1, \dots, x_n; \theta)$ (sup represents the supremum operation) or using the log-likelihood function $L(x_1, x_2, \dots, x_n; \theta) = \log f_X(x_1, x_2, \dots, x_n; \theta)$. Since log is monotonically increasing, both functions have the same maximizer.
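A minimal Python sketch of the sup operation as a brute-force grid search. It assumes, purely for illustration, Gaussian observations with known σ (the model of the next example); the helper names `likelihood`, `log_likelihood` and `grid_ml` are hypothetical. Both functions share the same maximizer, but for even moderately large n the raw product of densities underflows to zero in floating point, which is one practical reason to work with the log-likelihood.

```python
import math
import random

# Illustrative model (an assumption): X_i ~ N(theta, sigma^2) with sigma known.
def gaussian_pdf(x, theta, sigma):
    return math.exp(-(x - theta) ** 2 / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)

def likelihood(xs, theta, sigma):
    # Joint PDF of independent observations = product of the marginal PDFs.
    prod = 1.0
    for x in xs:
        prod *= gaussian_pdf(x, theta, sigma)
    return prod

def log_likelihood(xs, theta, sigma):
    # L = log of the joint PDF = sum of the log-densities.
    const = -0.5 * math.log(2 * math.pi * sigma ** 2)
    return sum(const - (x - theta) ** 2 / (2 * sigma ** 2) for x in xs)

def grid_ml(xs, sigma, lo, hi, steps):
    # Approximate the sup over theta by the best point on a uniform grid.
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return max(grid, key=lambda t: log_likelihood(xs, t, sigma))

random.seed(0)
true_theta, sigma = 2.0, 1.0        # assumed values for the simulation
xs = [true_theta + random.gauss(0.0, sigma) for _ in range(1000)]

print(likelihood(xs, true_theta, sigma))  # 0.0: the product has underflowed
theta_hat = grid_ml(xs, sigma, 0.0, 4.0, 800)
print(theta_hat, sum(xs) / len(xs))       # grid maximizer vs. sample mean
```

A grid search is only practical for one or two parameters; it is shown here because it implements the supremum literally, without assuming differentiability.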

- 7. If $L(x_1, x_2, \dots, x_n; \theta)$ is differentiable and a supremum $\hat\theta_{ML}$ exists at an interior point of the parameter range, then it must satisfy the equation $\left.\frac{\partial \log f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta}\right|_{\theta = \hat\theta_{ML}} = 0.$

- 8. Example: Let $X_i = \theta + w_i$, $i = 1, 2, \dots, n$, represent $n$ observations, where θ is the unknown parameter of interest and the $w_i$ are zero-mean independent normal RVs with common variance $\sigma^2$. Determine the ML estimate for θ. Solution: Since the $w_i$ are independent RVs and θ is an unknown constant, the $X_i$ are independent normal random variables with mean θ and variance $\sigma^2$.

- 9. Thus the likelihood function takes the form $f_X(x_1, x_2, \dots, x_n; \theta) = \prod_{i=1}^{n} f_{X_i}(x_i; \theta)$, where $f_{X_i}(x_i; \theta) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-(x_i - \theta)^2 / 2\sigma^2}$, so that $f_X(x_1, x_2, \dots, x_n; \theta) = \frac{1}{(2\pi\sigma^2)^{n/2}} e^{-\sum_{i=1}^{n} (x_i - \theta)^2 / 2\sigma^2}$.

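- 10. $L(X; \theta) = \ln f_X(x_1, x_2, \dots, x_n; \theta) = -\frac{n}{2} \ln(2\pi\sigma^2) - \sum_{i=1}^{n} \frac{(x_i - \theta)^2}{2\sigma^2}$, so
  $\left.\frac{\partial \ln f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta}\right|_{\theta = \hat\theta_{ML}} = \frac{1}{\sigma^2} \sum_{i=1}^{n} (x_i - \hat\theta_{ML}) = 0, \quad (1)$
  which gives
  $\hat\theta_{ML}(X) = \frac{1}{n} \sum_{i=1}^{n} X_i. \quad (2)$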
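As a quick numerical check of (1) and (2), the sketch below simulates data with assumed values θ = 5 and σ = 2 (helper names `score` and `theta_ml` are hypothetical), computes the sample mean, and evaluates the score $\frac{1}{\sigma^2}\sum_i (x_i - \theta)$ there. The score is zero up to rounding, positive to the left and negative to the right, so the sample mean is indeed the maximizer.

```python
import random

def score(xs, theta, sigma):
    # Derivative of the Gaussian log-likelihood w.r.t. theta: sum(x_i - theta) / sigma^2
    return sum(x - theta for x in xs) / sigma ** 2

def theta_ml(xs):
    # Equation (2): the ML estimate is the sample mean
    return sum(xs) / len(xs)

random.seed(1)
theta, sigma = 5.0, 2.0          # assumed "true" values for the simulation
xs = [theta + random.gauss(0.0, sigma) for _ in range(500)]

est = theta_ml(xs)
print(est, score(xs, est, sigma))                 # score is ~0 at the estimate
print(score(xs, est - 0.1, sigma) > 0, score(xs, est + 0.1, sigma) < 0)  # True True
```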

- 11. Since $E[\hat\theta_{ML}(X)] = \frac{1}{n} \sum_{i=1}^{n} E(X_i) = \theta$, the expected value of the estimator does not differ from the desired parameter, and hence there is no bias between the two. Such estimators are known as unbiased estimators. Moreover, the variance of the estimator is given by $\mathrm{Var}(\hat\theta_{ML}) = E[(\hat\theta_{ML} - \theta)^2] = \frac{1}{n^2} E\left\{\left(\sum_{i=1}^{n} (X_i - \theta)\right)^2\right\} = \frac{1}{n^2}\left\{\sum_{i=1}^{n} E(X_i - \theta)^2 + \sum_{i=1}^{n} \sum_{j=1, j \ne i}^{n} E[(X_i - \theta)(X_j - \theta)]\right\}$

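- 12. By independence, each cross term $E[(X_i - \theta)(X_j - \theta)] = E(X_i - \theta)\,E(X_j - \theta) = 0$ for $i \ne j$, so $\mathrm{Var}(\hat\theta_{ML}) = \frac{1}{n^2} \sum_{i=1}^{n} \mathrm{Var}(X_i) = \frac{n\sigma^2}{n^2} = \frac{\sigma^2}{n}$. Thus $\mathrm{Var}(\hat\theta_{ML}) \to 0$ as $n \to \infty$, another desirable property. We say such estimators are consistent estimators. The next two examples show that the ML estimator can be highly nonlinear.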
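The unbiasedness and the $\sigma^2/n$ variance can be checked by Monte Carlo simulation. This is a minimal sketch under assumed settings (θ = 1, σ = 3, 1000 trials per sample size); `simulate_estimates` is a hypothetical helper that draws many independent data sets and records the ML estimate (2) for each.

```python
import random
import statistics

def simulate_estimates(theta, sigma, n, trials, seed=0):
    # Repeat the experiment `trials` times and collect theta_ML = sample mean.
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        xs = [theta + rng.gauss(0.0, sigma) for _ in range(n)]
        estimates.append(sum(xs) / n)
    return estimates

theta, sigma = 1.0, 3.0          # assumed simulation values
for n in (10, 100, 1000):
    est = simulate_estimates(theta, sigma, n, trials=1000)
    # empirical mean (should be near theta), empirical variance, theory sigma^2 / n
    print(n, statistics.fmean(est), statistics.pvariance(est), sigma ** 2 / n)
```

The empirical variance shrinks roughly tenfold each time n grows tenfold, as $\sigma^2/n$ predicts.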

- 13. Example: Let $X_1, X_2, \dots, X_n$ be independent, identically distributed uniform random variables in the interval $(0, \theta)$ with common PDF $f_{X_i}(x_i; \theta) = \frac{1}{\theta}$, $0 \le x_i \le \theta$, where θ is an unknown parameter. Find the ML estimate for θ.

- 14. Solution: $f_X(x_1, x_2, \dots, x_n; \theta) = \frac{1}{\theta^n}$, $0 \le x_i \le \theta$, $i = 1, \dots, n$, or equivalently $\frac{1}{\theta^n}$, $0 \le \max(x_1, x_2, \dots, x_n) \le \theta$ (and zero otherwise). Since $1/\theta^n$ decreases as θ grows, the likelihood function in this case is maximized by the smallest admissible value of θ, and since $\theta \ge \max(X_1, X_2, \dots, X_n)$, we get
  $\hat\theta_{ML}(X) = \max(X_1, X_2, \dots, X_n). \quad (3)$
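The shape of this likelihood explains why no derivative condition is used here: it is zero to the left of $\max(x_i)$ and strictly decreasing to the right, so the maximum sits on the boundary. A small sketch with an assumed θ = 4 and 20 simulated observations (`uniform_likelihood` and `theta_ml_uniform` are hypothetical names):

```python
import random

def uniform_likelihood(xs, theta):
    # f_X(x; theta) = theta^(-n) if every x_i lies in [0, theta], and 0 otherwise
    if theta <= 0 or min(xs) < 0 or max(xs) > theta:
        return 0.0
    return theta ** (-len(xs))

def theta_ml_uniform(xs):
    # Equation (3): the smallest theta that still covers every observation
    return max(xs)

random.seed(2)
theta = 4.0                      # assumed "true" value for the simulation
xs = [random.uniform(0.0, theta) for _ in range(20)]
est = theta_ml_uniform(xs)

# Zero to the left of the estimate, strictly decreasing to the right of it
for t in (0.99 * est, est, 1.01 * est, 1.5 * est):
    print(round(t, 4), uniform_likelihood(xs, t))
```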

- 15. The estimator (3) is a nonlinear function of the observations. To determine whether (3) is an unbiased estimate for θ, we need to evaluate its mean. In this case, it is easier to first determine its PDF and proceed directly. Let $Z = \max(X_1, X_2, \dots, X_n)$. Then, using independence, $F_Z(z) = P[\max(X_1, X_2, \dots, X_n) \le z] = P(X_1 \le z, X_2 \le z, \dots, X_n \le z) = \prod_{i=1}^{n} P(X_i \le z) = \prod_{i=1}^{n} F_{X_i}(z) = \left(\frac{z}{\theta}\right)^n$, $0 \le z \le \theta$, and
  $f_Z(z) = \frac{n z^{n-1}}{\theta^n}$ for $0 \le z \le \theta$, and $0$ otherwise.

- 16. $E[\hat\theta_{ML}(X)] = E(Z) = \int_0^\theta z f_Z(z)\,dz = \frac{n}{\theta^n}\int_0^\theta z^n\,dz = \frac{n\theta}{n+1} = \frac{\theta}{1 + 1/n}$. Since $E[\hat\theta_{ML}(X)] \ne \theta$, the ML estimator is not an unbiased estimator for θ. However, $\lim_{n\to\infty} E[\hat\theta_{ML}(X)] = \lim_{n\to\infty} \frac{\theta}{1 + 1/n} = \theta$, so the ML estimator is an asymptotically unbiased estimator.

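- 17. $E(Z^2) = \int_0^\theta z^2 f_Z(z)\,dz = \frac{n}{\theta^n} \int_0^\theta z^{n+1}\,dz = \frac{n\theta^2}{n+2}$, so $\mathrm{Var}[\hat\theta_{ML}(X)] = E(Z^2) - [E(Z)]^2 = \frac{n\theta^2}{n+2} - \frac{n^2\theta^2}{(n+1)^2} = \frac{n\theta^2}{(n+1)^2(n+2)}$. Since this variance tends to 0 as $n \to \infty$ and the estimator is asymptotically unbiased, we have a consistent estimator.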
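The bias $E(Z) = n\theta/(n+1)$ and the variance formula can both be checked by simulation. The sketch below assumes θ = 2 and 20000 trials; `max_estimates` is a hypothetical helper returning one ML estimate (3) per simulated data set. For n = 5 the empirical mean settles visibly below θ, while for n = 50 both the bias and the variance are much smaller.

```python
import random
import statistics

def max_estimates(theta, n, trials, seed=0):
    # One ML estimate (3), i.e. the sample maximum, per simulated data set.
    rng = random.Random(seed)
    return [max(rng.uniform(0.0, theta) for _ in range(n)) for _ in range(trials)]

theta = 2.0                      # assumed simulation value
for n in (5, 50):
    z = max_estimates(theta, n, trials=20000)
    mean_formula = n * theta / (n + 1)
    var_formula = n * theta ** 2 / ((n + 1) ** 2 * (n + 2))
    print(n, statistics.fmean(z), mean_formula, statistics.pvariance(z), var_formula)
```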

- 18. Example 12.3: Let $\{X_1, X_2, \dots, X_n\}$ be IID Gamma random variables with unknown parameters α and β. Determine the ML estimators for α and β. Solution: For $x_i \ge 0$, $f_X(x_1, x_2, \dots, x_n; \alpha, \beta) = \frac{\beta^{n\alpha}}{(\Gamma(\alpha))^n} \prod_{i=1}^{n} x_i^{\alpha - 1} e^{-\beta \sum_{i=1}^{n} x_i}$, and
  $L(x_1, x_2, \dots, x_n; \alpha, \beta) = \log f_X(x_1, x_2, \dots, x_n; \alpha, \beta) = n\alpha \log\beta - n \log\Gamma(\alpha) + (\alpha - 1)\sum_{i=1}^{n} \log x_i - \beta \sum_{i=1}^{n} x_i$.

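- 19. Setting both partial derivatives to zero at $(\alpha, \beta) = (\hat\alpha, \hat\beta)$:
  $\left.\frac{\partial L}{\partial \alpha}\right|_{\alpha,\beta = \hat\alpha,\hat\beta} = \left. n\log\beta - n\frac{\Gamma'(\alpha)}{\Gamma(\alpha)} + \sum_{i=1}^{n} \log x_i \right|_{\alpha,\beta = \hat\alpha,\hat\beta} = 0$ and $\left.\frac{\partial L}{\partial \beta}\right|_{\alpha,\beta = \hat\alpha,\hat\beta} = \left.\frac{n\alpha}{\beta} - \sum_{i=1}^{n} x_i\right|_{\alpha,\beta = \hat\alpha,\hat\beta} = 0$. The second equation gives
  $\hat\beta_{ML}(X) = \frac{\hat\alpha_{ML}}{\frac{1}{n}\sum_{i=1}^{n} x_i}$,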

- 20. and substituting this into the first equation gives
  $\log\hat\alpha_{ML} - \frac{\Gamma'(\hat\alpha_{ML})}{\Gamma(\hat\alpha_{ML})} = \log\left(\frac{1}{n}\sum_{i=1}^{n} x_i\right) - \frac{1}{n}\sum_{i=1}^{n} \log x_i$.
  This is a highly nonlinear equation in $\hat\alpha_{ML}$. In general, the (log-)likelihood equation can have more than one solution, or no solution at all. Further, the (log-)likelihood function may not even be differentiable, or it can be extremely complicated to solve explicitly.
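Although this equation has no closed-form solution, it is easy to solve numerically. The sketch below is one possible approach, not the method from the slides: it uses bisection, relying on the fact that $\log\alpha - \Gamma'(\alpha)/\Gamma(\alpha)$ decreases strictly from $+\infty$ to 0, so the root is unique when the right side is positive. Python's standard library has no digamma function, so `digamma` here is a hand-written approximation (recurrence plus asymptotic series); the helper names and the simulated values α = 2.5, β = 4 are assumptions. Note that `random.gammavariate` takes a *scale* parameter, so the rate β of the slides is passed as `1 / beta`.

```python
import math
import random

def digamma(x):
    # psi(x) = Gamma'(x)/Gamma(x): shift x upward with psi(x) = psi(x + 1) - 1/x,
    # then apply the standard asymptotic series (accurate to ~1e-9 for x >= 6).
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result

def gamma_ml(xs):
    # Solve log(a) - psi(a) = log(mean) - mean(log x) for a by bisection.
    n = len(xs)
    mean = sum(xs) / n
    rhs = math.log(mean) - sum(math.log(x) for x in xs) / n
    g = lambda a: math.log(a) - digamma(a) - rhs   # strictly decreasing in a
    lo, hi = 1e-8, 1.0
    while g(hi) > 0:             # grow the bracket until the sign changes
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    alpha_hat = 0.5 * (lo + hi)
    return alpha_hat, alpha_hat / mean   # beta_hat = alpha_hat / sample mean

rng = random.Random(3)
alpha_true, beta_true = 2.5, 4.0      # assumed values; beta is a rate here
xs = [rng.gammavariate(alpha_true, 1.0 / beta_true) for _ in range(5000)]
print(gamma_ml(xs))                   # estimates close to (2.5, 4.0)
```

Bisection is chosen for robustness; Newton's method would converge faster but needs the trigamma function and a good starting point.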

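- 21. Cramér-Rao Bound: The variance of any unbiased estimator $\hat\theta$ for θ based on observations $\{x_1, x_2, \dots, x_n\}$ must satisfy the lower bound
  $\mathrm{Var}(\hat\theta) \ge \frac{1}{E\left\{\left(\frac{\partial \ln f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta}\right)^2\right\}} \quad (4)$
  $= \frac{-1}{E\left\{\frac{\partial^2 \ln f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta^2}\right\}}. \quad (5)$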

- 22. This important result states that the right side of the above equation acts as a lower bound on the variance of all unbiased estimators for θ, provided their joint PDF satisfies certain regularity conditions. Naturally, any unbiased estimator whose variance coincides with that in (4) must be the best. There are no better solutions! Such estimates are known as efficient estimators.

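- 23. As an illustration, consider the Gaussian example in (1) and (2). Here $\left(\frac{\partial \ln f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta}\right)^2 = \frac{1}{\sigma^4}\left(\sum_{i=1}^{n} (X_i - \theta)\right)^2$, so
  $E\left\{\left(\frac{\partial \ln f_X(x_1, x_2, \dots, x_n; \theta)}{\partial \theta}\right)^2\right\} = \frac{1}{\sigma^4}\left\{\sum_{i=1}^{n} E[(X_i - \theta)^2] + \sum_{i=1}^{n}\sum_{j=1, j\ne i}^{n} E[(X_i - \theta)(X_j - \theta)]\right\}$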

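- 24. $= \frac{1}{\sigma^4}\sum_{i=1}^{n} \sigma^2 = \frac{n}{\sigma^2}$ (the cross terms vanish by independence), $\Rightarrow \mathrm{Var}(\hat\theta) \ge \frac{\sigma^2}{n}$. Since the ML estimator (2) has variance exactly $\sigma^2/n$, it attains this bound $\Rightarrow$ an efficient estimator.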
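The Fisher information in (4) can also be estimated by simulation: average the squared score at the true θ over many data sets and compare with $n/\sigma^2$, then compare the empirical variance of the ML estimate (2) with the resulting bound. A minimal sketch; θ = 0.5, σ = 2, n = 25 and 20000 trials are assumed settings.

```python
import random
import statistics

def score(xs, theta, sigma):
    # d/dtheta of the Gaussian log-likelihood, as in (1)
    return sum(x - theta for x in xs) / sigma ** 2

rng = random.Random(4)
theta, sigma, n, trials = 0.5, 2.0, 25, 20000   # assumed simulation settings
scores, estimates = [], []
for _ in range(trials):
    xs = [theta + rng.gauss(0.0, sigma) for _ in range(n)]
    scores.append(score(xs, theta, sigma))      # score at the true theta
    estimates.append(sum(xs) / n)               # ML estimate (2)

fisher_mc = statistics.fmean([s * s for s in scores])    # E[(d ln f / d theta)^2]
print(fisher_mc, n / sigma ** 2)                          # compare with n / sigma^2
print(statistics.pvariance(estimates), 1.0 / fisher_mc)  # Var(theta_ML) vs. the bound
```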

- 25. So far, we have discussed unknown nonrandom parameters. What if the parameter of interest is a random variable with a-priori PDF $f_\theta(\theta)$? How does one obtain a good estimate for θ based on the observations $\{x_1, x_2, \dots, x_n\}$?

- 26. One technique is to use the observations to compute its a-posteriori probability density function. Of course, we can use Bayes' theorem to obtain this a-posteriori PDF. This gives
  $f_{\theta|X}(\theta \mid x_1, \dots, x_n) = \frac{f_{X|\theta}(x_1, \dots, x_n \mid \theta)\, f_\theta(\theta)}{f_X(x_1, \dots, x_n)}. \quad (6)$

- 27. θ is the variable in (6). Given $\{x_1, x_2, \dots, x_n\}$, the most likely value of θ suggested by the above a-posteriori PDF is naturally the one corresponding to its maximum. This estimator, the maximum of the a-posteriori PDF, is known as the MAP estimator for θ. It is possible to use other optimality criteria as well. Of course, that should be the subject matter of another course!
  Figure 2: MAP principle (the a-posteriori PDF $f_{\theta|X}(\theta \mid x_1, \dots, x_n)$ plotted against θ, peaking at $\hat\theta_{MAP}(x)$).
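A minimal MAP sketch. The slides do not fix a prior, so this assumes, for illustration only, the Gaussian observation model of (1) and (2) together with a Gaussian prior $\theta \sim N(\mu_0, \sigma_0^2)$. Because the denominator $f_X(x_1, \dots, x_n)$ in (6) does not depend on θ, it suffices to maximize $\log f_{X|\theta} + \log f_\theta$. Under these assumptions the maximizer has the closed form $(n\bar x/\sigma^2 + \mu_0/\sigma_0^2)/(n/\sigma^2 + 1/\sigma_0^2)$, which the grid search should reproduce. All helper names are hypothetical.

```python
import random

def log_posterior_unnormalized(theta, xs, sigma, mu0, sigma0):
    # log f_{X|theta}(x|theta) + log f_theta(theta), up to constants;
    # f_X(x) in (6) does not depend on theta, so it is dropped.
    log_lik = -sum((x - theta) ** 2 for x in xs) / (2 * sigma ** 2)
    log_prior = -(theta - mu0) ** 2 / (2 * sigma0 ** 2)   # assumed Gaussian prior
    return log_lik + log_prior

def map_grid(xs, sigma, mu0, sigma0, lo, hi, steps):
    # Brute-force maximization of the a-posteriori PDF over a grid.
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return max(grid, key=lambda t: log_posterior_unnormalized(t, xs, sigma, mu0, sigma0))

def map_closed_form(xs, sigma, mu0, sigma0):
    # Precision-weighted average of the sample mean and the prior mean.
    n = len(xs)
    xbar = sum(xs) / n
    w_data, w_prior = n / sigma ** 2, 1 / sigma0 ** 2
    return (w_data * xbar + w_prior * mu0) / (w_data + w_prior)

rng = random.Random(5)
sigma, mu0, sigma0 = 1.0, 0.0, 0.5               # assumed model settings
theta_true = rng.gauss(mu0, sigma0)              # theta drawn from the prior
xs = [theta_true + rng.gauss(0.0, sigma) for _ in range(10)]

theta_map_grid = map_grid(xs, sigma, mu0, sigma0, -3.0, 3.0, 6000)
theta_map = map_closed_form(xs, sigma, mu0, sigma0)
print(theta_map_grid, theta_map, sum(xs) / len(xs))  # MAP vs. ML (sample mean)
```

With only 10 observations the MAP estimate is pulled from the sample mean toward the prior mean $\mu_0$; as n grows the data term dominates and the MAP and ML estimates approach each other.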