Simple Framework for Contrastive Learning Visual Representations

- 1. A Simple Framework for Contrastive Learning ofVisual Representations Ting Chen, et al., “A Simple Framework for Contrastive Learning of Visual Representations” 8th March, 2020 PR12 Paper Review JinWon Lee Samsung Electronics

- 2. References • The Illustrated SimCLR Framework https://amitness.com/2020/03/illustrated-simclr/ • Exploring SimCLR: A Simple Framework for Contrastive Learning of Visual Representations https://towardsdatascience.com/exploring-simclr-a-simple-framework-for- contrastive-learning-of-visual-representations-158c30601e7e • SimCLR: Contrastive Learning ofVisual Representations https://medium.com/@nainaakash012/simclr-contrastive-learning-of-visual- representations-52ecf1ac11fa

- 3. Introduction • Learning effective visual representations without human supervision is a long-standing problem. • Most mainstream approaches fall into one of two classes: generative or discriminative. Generative approaches – pixel level generation is computationally expensive and may not be necessary for representation learning. Discriminative approaches learn representations using objective function like supervised learning but pretext tasks have relied on somewhat ad-hoc heuristics, which limits the generality of learn representations.

- 4. Contrastive Learning – Intuition

- 5. Contrastive Learning • Contrastive methods aim to learn representations by enforcing similar elements to be equal and dissimilar elements to be different.

- 6. Contrastive Learning – Data • Example pairs of images which are similar and images which are different are required for training a model Images from “The Illustrated SimCLR Framework”

- 7. Supervised & Self-supervisedApproach Images from “The Illustrated SimCLR Framework”

- 8. Contrastive Learning – Representstions Images from “The Illustrated SimCLR Framework”

- 9. Contrastive Learning – Similarity Metric Images from “The Illustrated SimCLR Framework”

- 10. Contrastive Learning – Noise Contrastive Estimator Loss • x+ is a positive example and x- is a negative example • sim(.) is a similarity function • Note that each positive pair (x,x+) we have a set of K negatives

- 11. SimCLR

- 12. SimCLR – Overview • A stochastic data augmentation module that transforms any given data example randomly resulting in two correlated views of the same example. Random crop and resize(with random flip), color distortions, and Gaussian blur • ResNet50 is adopted as a encoder, and the output vector is from GAP layer. (2048-dimension) • Two layer MLP is used in projection head. (128-dimensional latent space) • No explicit negative sampling. 2(N-1) augmented examples within a minibatch are used for negative samples. (N is a batch size) • Cosine similarity function is a used similarity metric. • Normalized temperature-scaled cross entropy(NT-Xent) loss is used.

- 13. SimCLR - Overview • Training Batch size : 256~8192 A batch size of 8192 gives 16382 negative examples per positive pair from both augmentation views. To stabilize the training, LARS optimizer is used. Aggregating BN mean and variance over all devices during training. With 128TPU v3 cores, it takes ~1.5 hours to train ResNet-50 with a batch size of 4096 for 100 epochs • Dataset – ImageNet 2012 dataset • To evaluate the learned representations, linear evaluation protocol is used.

- 14. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 15. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 16. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 17. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 18. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 19. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 20. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 21. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 22. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 23. Step by Step Example Images from “The Illustrated SimCLR Framework”

- 24. Data Augmentation for Contrastive Representation Learning • Many existing approaches define contrastive prediction tasks by changing architecture. • The authors use only simple data augmentation methods, this simple design choice conveniently decouples the predictive task from other components such as the NN architecture.

- 25. Data Augmentation for Contrastive Representation Learning Augmentations in the red boxes are used

- 26. Linear Evaluation under Individual or Composition of Data Augmentation

- 27. Evaluation of Data Augmentation • Asymmetric data transformation method is used. Only one branch of the frame work is applied the target transformation(s). • No single transformation suffices to learn good representations, even though the model can almost perfectly identify the positive pairs. • Random cropping and random color distortion stands out When using only random cropping as data augmentation is that most patches from an image share a similar color distortion

- 28. Contrastive Learning Needs Stronger Data Augmentation • Stronger color augmentation substantially improves the linear evaluation of the learned unsupervised models. • A sophisticated augmentation policy(such as AutoAugment) does not work better than simple cropping + (stronger) color distortion • Unsupervised contrastive learning benefits from stronger (color) data augmentation than supervised learning. • Data augmentation that does not yield accuracy benefits for supervised learning can still help considerably with contrastive learning.

- 29. Unsupervised Contrastive Learning Benefits from Bigger Models • Unsupervised learning benefits more from bigger models than its supervised counter part.

- 30. Nonlinear Projection Head • Nonlinear projection is better than a linear projection(+3%) and much better than no projection(>10%)

- 31. Nonlinear Projection Head • The hidden layer before the projection head is a better representation than the layer after. • The importance of using the representation before the nonlinear projection is due to loss of information induced by the contrastive loss. In particular z = g(h) is trained to be invariant to data transformation.

- 32. Loss Function • l2 normalization along with temperature effectively weights different examples, and an appropriate temperature can help the model learn from hard negatives. • Unlike cross-entropy, other objective functions do not weigh the negatives by their relative hardness.

- 33. Larger Batch Size and LongerTraining • When the training epochs is small, larger batch size have a significant advantage. • Larger batch sizes provide more negative examples, facilitating convergence, and training longer also provides more negative examples, improving the results.

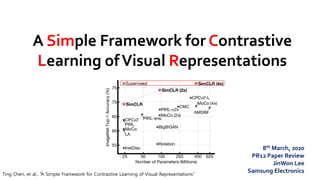

- 34. Comparison with SOTA – Linear Evaluation

- 35. Comparison with SOTA – Semi-supervised Learning

- 38. Appendix – Effects of LongerTraining for Supervised Learning • There is no significant benefit from training supervised models longer on ImageNet. • Stronger data augmentation slightly improves the accuracy of ResNet-50 (4x) but does not help on ResNet-50.

- 39. Appendix – CIFAR-10 Dataset Results

- 40. Conclusion • SimCLR differs from standard supervised learning on ImageNet only in the choice of data augmentation, the use of nonlinear head at the end of the network, and the loss function. • Composition of data augmentations plays a critical role in defining effective predictive tasks. • Nonlinear transformation between the representation and the contrastive loss substantially improves the quality of the learned representations. • Contrastive learning benefits from larger batch sizes and more training steps compared to supervised learning. • SimCLR achieves 76.5% top-1 accuracy, which is a 7% relative improvement over previous state-of-the-art, matching the performance of a supervised ResNet-50.