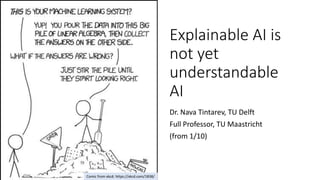

Explainable AI is not yet Understandable AI

- 1. Explainable AI is not yet understandable AI Dr. Nava Tintarev, TU Delft Full Professor, TU Maastricht (from 1/10) Comic from xkcd: https://xkcd.com/1838/

- 2. Roadmap
  - Motivation + terminology
  - Explanations in recommender systems
  - What: Levels of explanation
  - When: Adapting explanations
  - Take home

- 3. Explanations in Machine learning? INSPECT / DON’T INSPECT. Masters thesis: Paul Bakker, TUD

- 4. [19] departments pledge to be publicly transparent about how decision-making is driven by algorithms, including giving “plain English” explanations; to make available information about the processes used and how data is stored unless forbidden by law (such as for reasons of national security); and to identify and manage biases informing algorithms. Guardian, 27th of July 2020

- 5. Researchers focus on understandability and explainability. Citizens want to see and correct their information. Decision makers want accountability (both for data and algorithms). Sources: The Guardian, Feb 5th 2020; Het Parool, 28th July 2020; Marleen Huysman, https://cacm.acm.org/news/246457-the-impact-of-ai-on-organizations/fulltext, July 2020

- 6. Marleen Huysman, https://cacm.acm.org/news/246457-the-impact-of-ai-on-organizations/fulltext, July 2020
  - HR professionals became assistants to the algorithm instead.
  - HR professionals do not select or reject candidates anymore, but supply the algorithm with fresh data so it can make the decision on their behalf.
  - Furthermore, they need to repair mistakes made by the algorithm and act as its intermediary…
  - Predictive policing by the Dutch police.
  - We discovered that the interpretation and filtering of the AI outputs was too difficult to leave to the police officers themselves.
  - To solve this problem, the police set up an intelligence unit which translates the AI outputs into what police officers must actually do.

- 7. Justification and Explanation: a justification explains why a decision is a good one, without explaining exactly how it was made (Biran et al.). Cartoon by Erwin Suvaal from CVIII ontwerpers

- 8. Tintarev and Masthoff, 2007, Explanations in Recommender Systems:

  | Purpose | Description |
  | --- | --- |
  | Transparency | Explain how the system works |
  | Effectiveness | Help users make good decisions |
  | Trust | Increase users' confidence in the system |
  | Persuasiveness | Convince users to try or buy |
  | Satisfaction | Increase the ease of use or enjoyment |
  | Scrutability | Allow users to tell the system it is wrong |
  | Efficiency | Help users make decisions faster |

- 9. Interpretability is
  - the degree to which a human can understand the cause of a decision (Miller 2017)
  - the degree to which a human can consistently predict the model’s result (Kim et al 2016)

- 11. Decision tree/Anchors?
  - Close to natural language
  - Limited expressivity, manual crafting, does not scale
  - Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "Anchors: High-precision model-agnostic explanations." Thirty-Second AAAI Conference on Artificial Intelligence. 2018.

- 12. Example: Argumentation Explanation (Cerutti et al, ECAI 2014)
  - Clear structure
  - NL analog not evident
  - Human judgment not consistent with formal reasoning

- 13. Local models (LIME), https://github.com/marcotcr/lime
  - Simpler than full model
  - Local model not fully representative/non-deterministic
  - Many of same issues as decision trees (scale, interactions)

- 14. SHAP
  - Local accuracy, missingness, proportionality, feature interactions
  - Visualizations
  - Data scientists were found to over-trust and misuse local simplified (“interpretable”) machine learning models such as GAM and SHAP (Kaur et al, CHI 2020)
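
The local accuracy property named on the SHAP slide can be illustrated with a small brute-force Shapley computation. This is a sketch under stated assumptions, not the `shap` library's implementation: the toy `model`, the all-zeros `baseline`, and the helper `shapley_values` are hypothetical, and "missing" features are simulated by substituting the baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one instance x (exponential cost, toy sizes only)."""
    n = len(x)

    def value(subset):
        # Features in `subset` keep their real value; the rest take the baseline value.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for s in combinations(others, size):
                # Weight of a coalition of this size in the Shapley average.
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(set(s) | {i}) - value(set(s)))
        phi.append(total)
    return phi


def model(z):
    """Hypothetical model with an interaction between features 0 and 1."""
    return 2.0 * z[0] + 3.0 * z[0] * z[1] + z[2]


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)

# Local accuracy: the attributions sum to model(x) - model(baseline).
print(phi, sum(phi), model(x) - model(baseline))
```

In this toy case the interaction term is split evenly between features 0 and 1, which is one reason a per-feature attribution can be easy to over-read.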

- 15. Explanations in Machine learning? INSPECT / DON’T INSPECT. Masters thesis: Paul Bakker, TUD

- 17. Information overload. Too many: movies, ads, webpages, songs, plumbers, etc. Searching is difficult. What’s good, what’s not? Benefits of recommendation

- 19. What is a recommender system? Systems that make personalized recommendations of goods, services, and people (Kautz)
  - User identifies one or more objects as being of interest
  - The recommender system suggests other objects that are similar (infers liking)
  - Ranking and filtering algorithm: ranks the options, filters out lower-ranking options
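
The rank-and-filter steps on this slide can be sketched as follows. The slides do not name a scoring method, so the tag-overlap (Jaccard) similarity, the item names, and the `recommend` helper are all assumptions made for illustration.

```python
def jaccard(a, b):
    """Overlap between two tag sets (0 = disjoint, 1 = identical)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def recommend(liked, catalog, top_k=2):
    """Rank unseen items by similarity to the liked items, then keep the top_k.

    Assumes `liked` is non-empty and every liked item is in `catalog`.
    """
    scores = {}
    for item, tags in catalog.items():
        if item in liked:
            continue  # do not recommend what the user already picked
        # Score = best similarity to any liked item (liking inferred from similarity).
        scores[item] = max(jaccard(tags, catalog[other]) for other in liked)
    ranked = sorted(scores, key=lambda i: (-scores[i], i))  # rank the options
    return ranked[:top_k]                                   # filter out lower ranks


# Hypothetical catalog: item -> descriptive tags.
catalog = {
    "film_a": {"comedy", "romance"},
    "film_b": {"comedy", "drama"},
    "film_c": {"action", "adventure"},
    "film_d": {"comedy", "romance", "drama"},
}
print(recommend({"film_a"}, catalog))  # ['film_d', 'film_b']
```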

- 20. Explaining recommender systems: Your recommendations / Your music profile

- 22. Levels of explanation (Sullivan et al. 2019)

  | Level | Answers the question |
  | --- | --- |
  | Individual | “What is my interaction with the system so that I can understand my past?” |
  | Contextualization | “How does my interaction compare with the average so I can understand me within my community?” |
  | Self-actualization | “How can I use the system so I can reach my goals?” |

- 23. Individual: “Unfortunately, this movie belongs to at least one genre you do not want to see: Action & Adventure. It also belongs to the genre(s): Comedy. This movie stars Jo Marr and Robert Redford.” (Tintarev & Masthoff 2008; Cataldo Musto et al, UMAP 2019)

- 24. Contextualization (Photo by Ryoji Iwata on Unsplash)

- 25. Explanation-aware interfaces (Tintarev et al, SAC, 2018)

- 26. Explanations for groups (Tran et al, UMAP 2019)

- 28. Hoptopics results (Photo by Alina Grubnyak on Unsplash)
  - better sense of control and transparency
  - participants had a poor mental model for the degree of novel content for non-personalized data in the Inspectable interface
  - Relevance and novelty assessment interlinked

- 29. Self-actualization (Photo by Jared Rice on Unsplash)

- 30. Self-actualization
  - Few studies have tried to connect transparency and explanation with certain personal or societal values and goals
  - It is goal-directed and allows for user control to achieve those goals
  - The user has direct control over which goal they want to explore, and the recommendations that result from the chosen goal
  - Goals: “I want to be an expert”, “I want to stay informed”, “I want to broaden my horizon”, “I want to discover the unexplored”

- 31. Challenge
  - Users want personalized content
    - Increased engagement, satisfaction
  - Users care for explainability & transparency
    - Why is an item recommended?
    - What personal data is stored?
    - How do recommenders work?

- 32. Challenge
  - Journalists value journalistic independence.
  - Journalists want their articles to be read.
  - Journalists value their editorial choices.
  - What is important for users to read

- 33. Data
  - One month of real reading-behavior data from users of fd.nl, the website of the Dutch newspaper Het Financieele Dagblad
  - 100 user profiles
    - 50 average reading activity
    - 50 highly active
    - 4 maximally separated profiles for word clouds
  - 1600 articles
  - Metadata
    - Topic tags for articles (e.g. politics, economy)
    - Word2vec features

- 34. User study: Topic scoring
  - Familiarity: a user’s familiarity score with a topic is the ratio of articles read by the user on that topic over the total number of articles published on that topic.
  - Similarity: the similarity between topics in the user profile is the cosine similarity of aggregated word embeddings.
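
The two topic scores defined above can be sketched in a few lines. The slide does not say how word embeddings are aggregated, so the mean pooling in `topic_vector` is an assumption, and the counts and two-dimensional vectors are made-up toy data rather than values from the study.

```python
from math import sqrt


def familiarity(read_counts, published_counts, topic):
    """Articles the user read on a topic divided by all articles published on it."""
    published = published_counts.get(topic, 0)
    return read_counts.get(topic, 0) / published if published else 0.0


def topic_vector(word_vectors):
    """Aggregate a topic's word embeddings (mean pooling is an assumption)."""
    n = len(word_vectors)
    return [sum(column) / n for column in zip(*word_vectors)]


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Toy data (hypothetical): one user's reads and the publisher's totals per topic.
read_counts = {"politics": 5}
published_counts = {"politics": 20, "economy": 10}
print(familiarity(read_counts, published_counts, "politics"))  # 0.25
print(familiarity(read_counts, published_counts, "economy"))   # 0.0

# Toy 2-d "embeddings" for the words of two topics.
politics = topic_vector([[1.0, 0.0], [0.0, 1.0]])
economy = topic_vector([[1.0, 1.0]])
print(cosine_similarity(politics, economy))
```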

- 36. User study: Goals
  - “Your goal is to Broaden your Horizons. There may be topics you do not normally read about, but you may actually find interesting. Exploring this helps to build a broad perspective on the issues that matter to you.”
  - “Your goal is to Discover the Unexplored. There may be topics that you haven’t explored before that may actually become new interests. Exploring new topics can promote creativity and objectivity.”

- 37. Results of setting goals (Sullivan et al. 2019)
  - Broaden: more familiar topics selected than with Discover the Unexplored. Providing users with different goals influences their reading intentions; Discover could lead to more new topics being read.
  - Discover: fewer familiar topics among the selected ones (compared to unselected). This goal can encourage readers to explore new topics beyond those they normally read, but the new topics may be related to topics they already read.
  - Both: high variance of similarity for selected topics compared to unselected. Either goal encourages readers to read completely unrelated topics. Needs more study.

- 38. Case study: Blendle (Mats Mulder, MSc, 2020) Thumbnail 3.1% more Click-through rate for “diverse viewpoint” condition depended on: Number of hearts Interface matters

- 39. Reminder: Levels of explanation (Sullivan et al. 2019)

  | Level | Answers the question |
  | --- | --- |
  | Individual | “What is my interaction with the system so that I can understand my past?” |
  | Contextualization | “How does my interaction compare with the average so I can understand me within my community?” |
  | Self-actualization | “How can I use the system so I can reach my goals?” |

- 40. When to explain (Photos by Jessica Rockowitz, Helena Lopes, Fitsum Admasu and Priscilla Du Preez on Unsplash)

- 41. Explanation effectiveness: factors influencing effectiveness (Knijnenburg & Willemsen, 2018)

- 42. Explanation effectiveness
  - Situational characteristics?
    - Perceptions of diversity (Ferwerda2017, Kang2016, Willemsen2011)
    - Group recommendations (Najafian2018, Kapcak2018)
  - Personal characteristics?
    - Working memory (Conati2014, Tintarev2016, Tocker2012, Velex2005)
    - Expertise (Kobsa2001, Shah2011, Tocker2012)

- 44. Research questions: How do personal characteristics influence the impact of…
  - RQ1: user controls in terms of diversity and acceptance?
  - RQ2: visualizations in terms of diversity and acceptance?
  - RQ3: visualizations + user controls in terms of diversity and acceptance?
  - With Katrien Verbert (Associate Professor) and Yucheng Jin (PhD researcher). Jin et al 2018: UMAP and RecSys; UMUAI 2019

- 45. Expertise. Example: Goldsmiths Musical Sophistication Index:
  - Active Musical Engagement, e.g. how much time and money is spent on music
  - Self-reported Perceptual Abilities, e.g. accuracy of musical listening skills
  - Musical Training, e.g. amount of formal musical training received
  - Self-reported Singing Abilities, e.g. accuracy of one’s own singing
  - Sophisticated Emotional Engagement with Music, e.g. ability to talk about emotions that music expresses

- 46. Cognitive traits
  - Perceptual speed: quickly and accurately compare letters, numbers, objects, pictures, or patterns
  - Verbal working memory: temporary memory for words and verbal items
  - Visual working memory: temporary memory for visual items

- 48. Adapting to users: personal characteristics (Jin et al. UMUAI 2019)
  - User control: Musical sophistication influenced the acceptance of recommendations for user controls.
  - Visualization:
    - Musical sophistication also influenced perceived diversity for visualizations, but only for one of the studied visualizations.
    - Visual memory interacted with visualizations for perceived diversity, but only for one of the studied visualizations.

- 49. Situational characteristics
  - Examples: Location; Time of day; Who else is there; Previous interactions
  - Stress levels, e.g., sensors or a survey such as NASA TLX: Mental Demand; Physical Demand; Temporal Demand; Performance; Effort; Frustration

- 50. Situational: location, activity (Jin et al, UMAP 2019)
  - How do controls for contextual characteristics (mood, weather, time of day, and social) influence user perceptions of the system?
  - How do different scenarios (location, activity) influence user requirements for control of music recommendations?

- 51. Situational: groups. How to recommend items to groups when no best option (for all) exists? PhD: Shabnam Najafian (Najafian & Tintarev 2018, Najafian et al 2018)
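
A small sketch of why "no best option for all" arises: two aggregation strategies that are common in the group recommendation literature (average and least misery) can pick different items for the same group. The slides do not state which strategy the cited studies use, so the strategies, song names, and ratings here are assumptions for illustration only.

```python
def average(ratings):
    """Group score = mean of the members' ratings."""
    return sum(ratings) / len(ratings)


def least_misery(ratings):
    """Group score = rating of the least happy member."""
    return min(ratings)


def pick(group_ratings, strategy):
    """Return the item with the highest group score under the given strategy."""
    # sorted() makes tie-breaking deterministic (alphabetical order).
    return max(sorted(group_ratings), key=lambda item: strategy(group_ratings[item]))


# Hypothetical ratings (1-5) from three group members: a low-consensus case.
group_ratings = {
    "song_a": [5, 5, 1],  # two members love it, one is unhappy
    "song_b": [3, 3, 3],  # nobody loves it, nobody is unhappy
}
print(pick(group_ratings, average))       # song_a
print(pick(group_ratings, least_misery))  # song_b
```

Whichever item is chosen, at least one member gets less than their preferred option, which is the situation an explanation then has to address.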

- 52. Situational: groups (Najafian et al. IUI 2020)
  - Low consensus scenarios: Unhappy user; Unhappy acquaintances
  - High consensus scenario: Baseline

- 53. Transparency versus privacy The scenario influences people’s need for privacy. Mostly users avoided selecting the combination of name and personality. When the “minority” gets their preferred song, identity should not be disclosed together with personality.

- 54. Explanations overview
  - Problems: Understandability, Reliance
  - Three levels: user, context, self-actualization
  - When to explain:
    - Adapting to context, e.g., activity, surprising content, group
    - Adapting to users, e.g., expertise, working memory

- 55. Thank you! n.tintarev@maastrichtuniversity.nl, @navatintarev
  - Shabnam Najafian (PhD, started 2018)
  - Tim Draws (PhD, started 2019)
  - Alisa Rieger (PhD, started 2020)
  - Emily Sullivan, Post-doc (alumni)
  - Mesut Kaya, Post-doc (alumni)
  - Oana Inel, Post-doc
  - Dimitrios Bountouridis, Post-doc (alumni)
  - HIRING: Assistant professor and post-docs! Your name here?

- 56. References
  - K. Balog, F. Radlinski, and S. Arakelyan. "Transparent, Scrutable and Explainable User Models for Personalized Recommendation." 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’19), pages 265-274, July 2019.
  - O. Biran and C. Cotton. "Explanation and justification in machine learning: A survey." IJCAI-17 workshop on explainable AI (XAI). Vol. 8. No. 1. 2017.
  - F. Cerutti, N. Tintarev, and N. Oren. "Formal Arguments, Preferences, and Natural Language Interfaces to Humans: an Empirical Evaluation." ECAI. 2014.
  - Y. Jin, N. N. Htun, N. Tintarev, and K. Verbert. "Contextplay: user control for context-aware music recommendation." In ACM Conference on User Modeling, Adaptation and Personalization (UMAP). 2019.
  - Y. Jin, N. N. Htun, N. Tintarev, and K. Verbert. "Effects of personal characteristics in control-oriented user interfaces for music recommender systems." User Modeling and User-Adapted Interaction, 2019.
  - H. Kaur, H. Nori, S. Jenkins, R. Caruana, H. Wallach, and J. W. Vaughan. "Interpreting interpretability: Understanding data scientists' use of interpretability tools for machine learning." In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1-14, 2020.
  - B. Kim, R. Khanna, and O. O. Koyejo. "Examples are not enough, learn to criticize! Criticism for interpretability." Advances in Neural Information Processing Systems (2016).
  - B. P. Knijnenburg and M. C. Willemsen. "Evaluating recommender systems with user experiments." Recommender Systems Handbook. Springer, Boston, MA, 2015. 309-352.
  - T. Miller. "Explanation in artificial intelligence: Insights from the social sciences." arXiv preprint arXiv:1706.07269 (2017).
  - C. Molnar. "A guide for making black box models explainable." URL: https://christophm.github.io/interpretable-ml-book (2018).

- 57. References
  - M. Mulder. "Multiperspectivity in online news: An analysis of how reading behaviour is affected by viewpoint diverse news recommendations and how they are presented." Master thesis, TU Delft, 2020. http://resolver.tudelft.nl/uuid:7def1215-5b30-4536-8b8f-15588e2703e6
  - S. Najafian, O. Inel, and N. Tintarev. "Someone really wanted that song but it was not me! Evaluating Which Information to Disclose in Explanations for Group Recommendations." Proceedings of the 25th International Conference on Intelligent User Interfaces Companion. 2020.
  - E. Sullivan, et al. "Reading news with a purpose: Explaining user profiles for self-actualization." Adjunct Publication of the 27th Conference on User Modeling, Adaptation and Personalization. 2019.
  - N. Tintarev, S. Rostami, and B. Smyth. "Knowing the unknown: visualising consumption blind-spots in recommender system." In ACM Symposium On Applied Computing (SAC). 2018.
  - T. N. T. Tran, M. Atas, A. Felfernig, V. M. Le, R. Samer, and M. Stettinger. "Towards Social Choice-based Explanations in Group Recommender Systems." In Proceedings of the 27th ACM Conference on User Modeling, Adaptation and Personalization (UMAP ’19), 13-21. 2019.
  - N. Tintarev and J. Masthoff. "The effectiveness of personalized movie explanations: An experiment using commercial meta-data." In International Conference on Adaptive Hypermedia and Adaptive Web-Based Systems (pp. 204-213). July 2008.
  - F. Yang, Z. Huang, J. Scholtz, and D. L. Arendt. "How do visual explanations foster end users' appropriate trust in machine learning?" In Proceedings of the 25th International Conference on Intelligent User Interfaces (IUI ’20), pp. 189-201.